Assistive AR

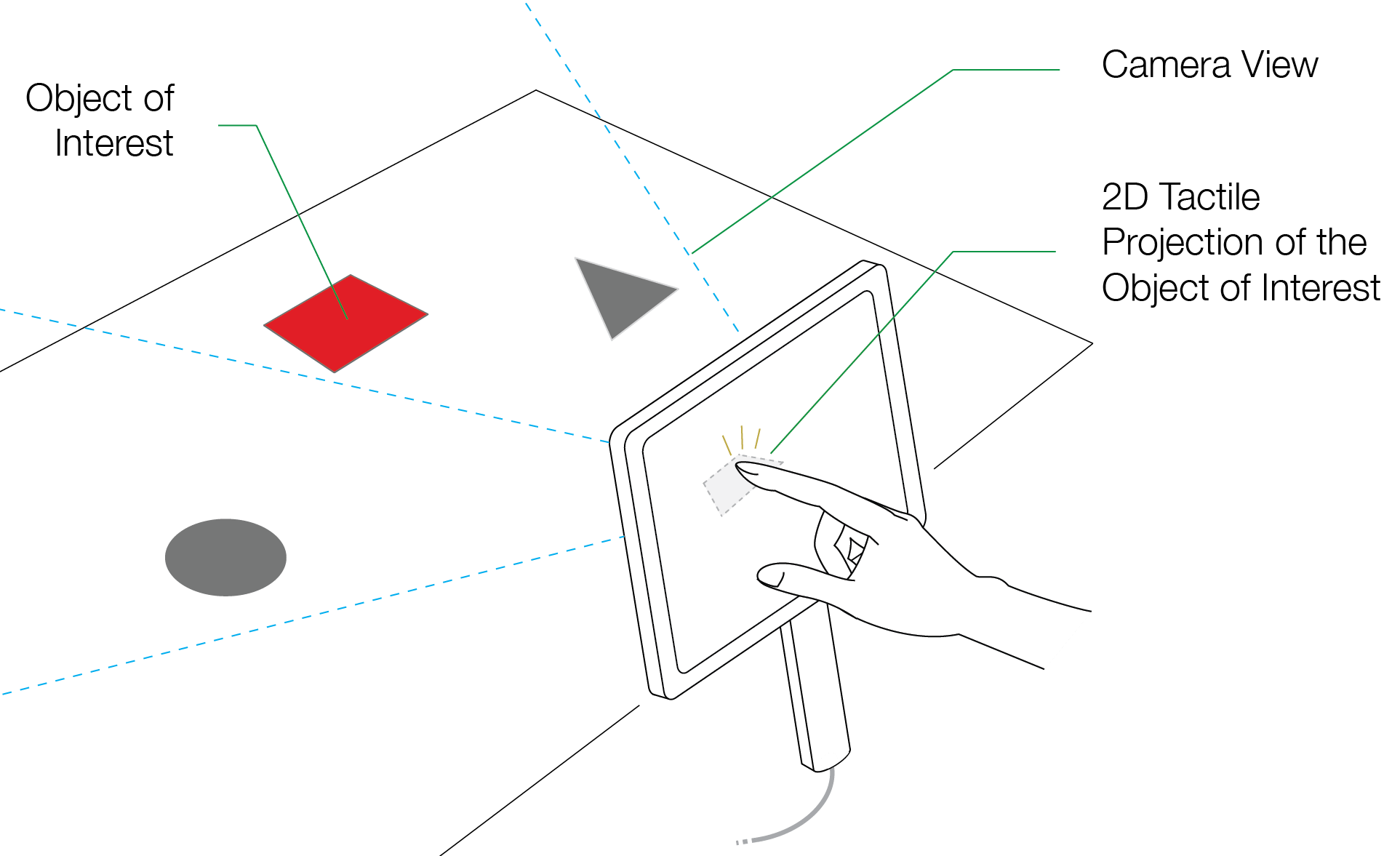

We designed a mobile, generic, and inexpensive tactile assistive device for the visually impaired. The device helps users explore their surroundings: they point it toward objects in the environment, and it renders tactile information representing the objects of interest sensed by its camera.

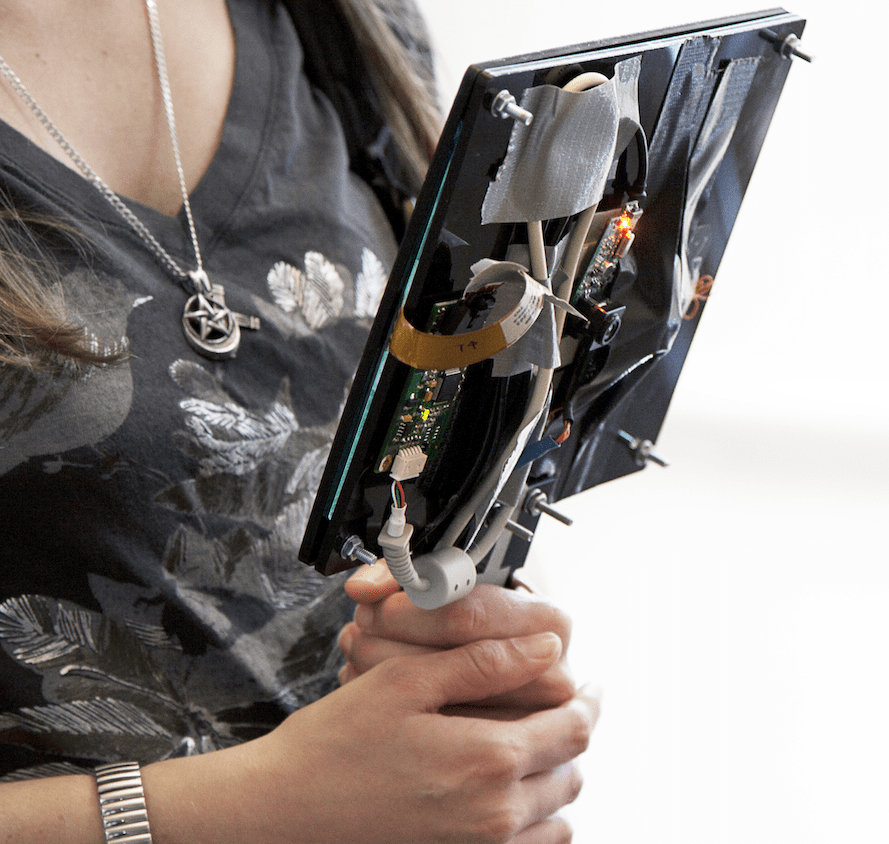

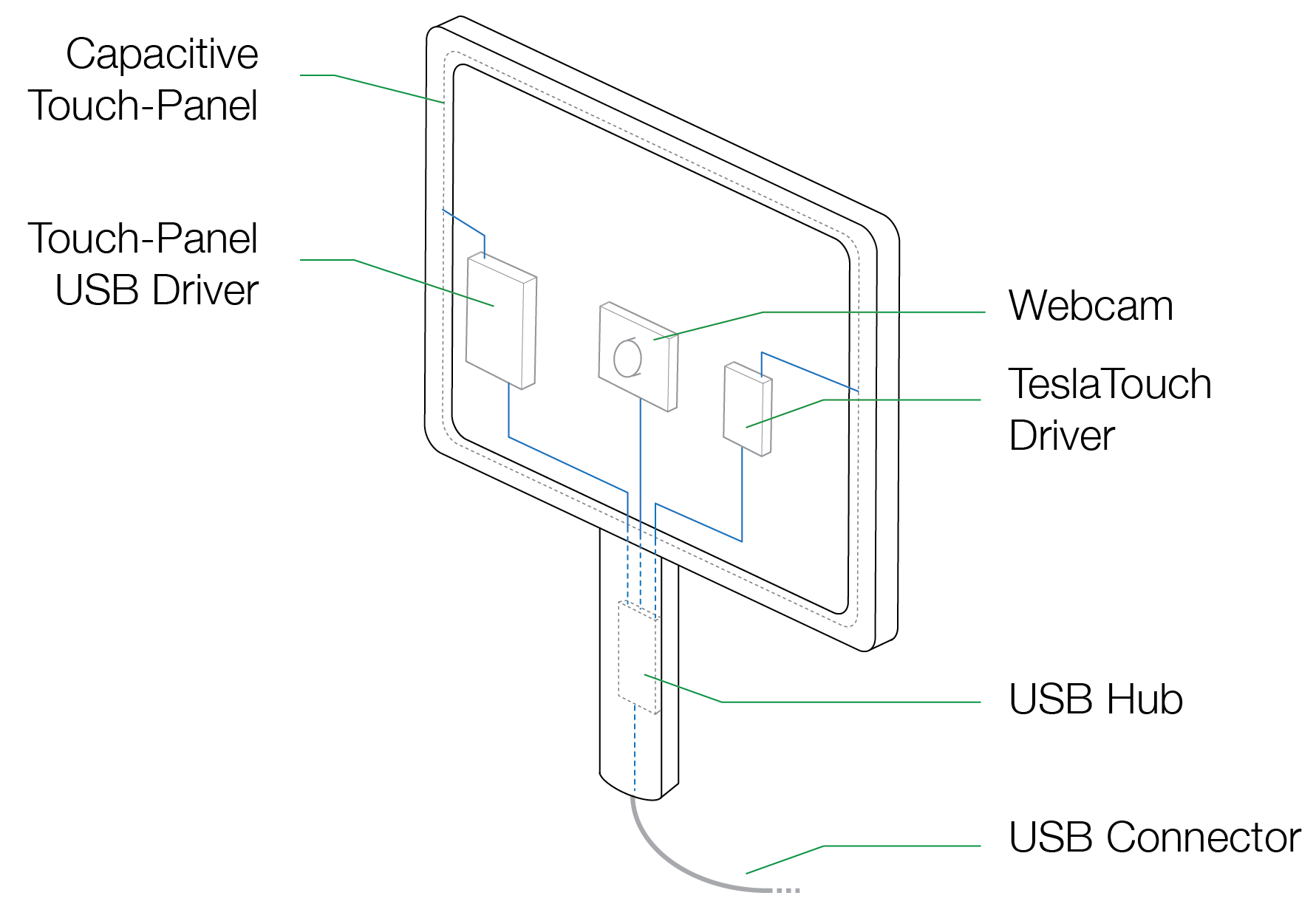

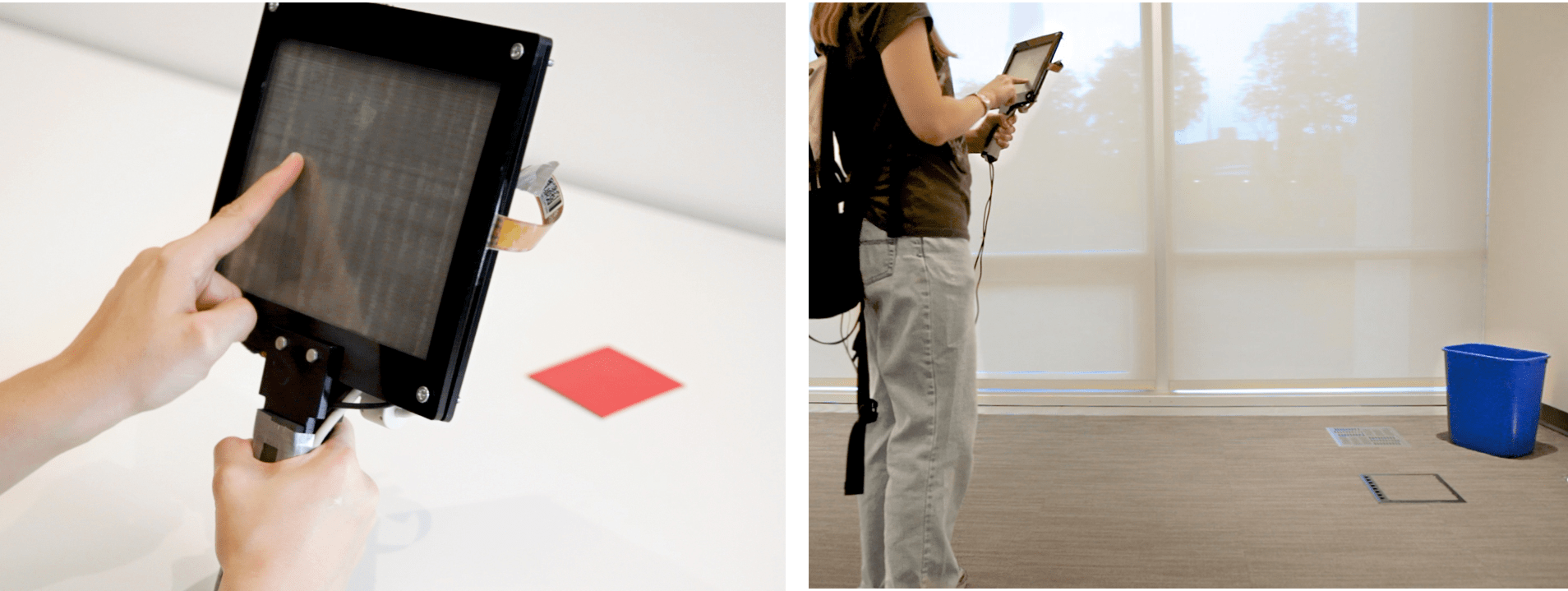

The handheld device combines a surface capacitive touch panel with TeslaTouch technology, which produces tactile textures on the touch surface. A camera is attached to the back, and all the hardware is housed in a casing shaped like a hand mirror. The user holds the device with one hand while sliding their fingers across the screen.

The camera senses remote objects in the environment, and the device translates them into tactile textures on the touch surface. When the user touches the 2D representation of an object on the surface, control signals are sent to the TeslaTouch hardware, which renders the corresponding tactile sensation. All components are connected to a computer, where computer-vision algorithms detect preset objects of interest.
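The touch-to-texture step described above can be illustrated with a minimal sketch. It assumes the vision stage outputs bounding boxes in normalized image coordinates, and that the camera image is mapped 1:1 onto the touch panel, so a normalized touch point can be compared directly against those boxes. The names `Detection`, `Texture`, `TEXTURES`, `texture_at`, and `drive_samples`, as well as the per-label frequency and amplitude values, are hypothetical and chosen for illustration; they are not the project's actual implementation or parameters.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Detection:
    """Hypothetical detector output: a labeled box in normalized image coords (0..1)."""
    label: str
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, u: float, v: float) -> bool:
        # Inclusive bounds check of a normalized point against the box.
        return self.x0 <= u <= self.x1 and self.y0 <= v <= self.y1


@dataclass
class Texture:
    """Parameters of a periodic drive signal (illustrative values only)."""
    frequency_hz: float
    amplitude: float  # normalized 0..1


# Hypothetical mapping from object label to texture; values are made up.
TEXTURES = {
    "cup": Texture(frequency_hz=80.0, amplitude=0.8),
    "door": Texture(frequency_hz=400.0, amplitude=0.6),
}
NO_TEXTURE = Texture(frequency_hz=0.0, amplitude=0.0)


def texture_at(u: float, v: float, detections: Sequence[Detection]) -> Texture:
    """Return the texture under a normalized touch point (u, v).

    Assumes the camera frame is shown 1:1 on the panel, so touch coordinates
    equal image coordinates. The first matching box wins if boxes overlap.
    """
    for det in detections:
        if det.contains(u, v):
            return TEXTURES.get(det.label, NO_TEXTURE)
    return NO_TEXTURE


def drive_samples(tex: Texture, sample_rate_hz: float, n: int) -> List[float]:
    """Generate n samples of a sine drive signal for the given texture."""
    return [
        tex.amplitude * math.sin(2.0 * math.pi * tex.frequency_hz * i / sample_rate_hz)
        for i in range(n)
    ]


if __name__ == "__main__":
    detections = [Detection("cup", 0.1, 0.1, 0.3, 0.3)]
    tex = texture_at(0.2, 0.2, detections)  # finger is on the cup's box
    print(tex, drive_samples(tex, sample_rate_hz=8000.0, n=4))
```

A sine wave is simply the most basic periodic signal to parameterize by frequency and amplitude. A real system would also need calibration between camera and panel coordinates, plus whatever signal shaping the TeslaTouch hardware actually expects.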

With the help of two visually impaired participants, we evaluated the device's performance on detecting, exploring, and reaching tasks. In a controlled room setting, both participants were able to detect, explore, and reach for a given object of interest using only the tactile information rendered on the device's flat panel.

Publications

Ali Israr, Olivier Bau, Seung-Chan Kim, and Ivan Poupyrev. Tactile feedback on flat surfaces for the visually impaired. ACM CHI ’12 Extended Abstracts.

Cheng Xu, Ali Israr, Ivan Poupyrev, Olivier Bau, and Chris Harrison. Tactile display for the visually impaired using TeslaTouch. ACM CHI ’11 Extended Abstracts.

Team and Credits

Project done at Disney Research Pittsburgh together with Ali Israr, Cheng Xu and Seung-Chan Kim.