OctoPocus

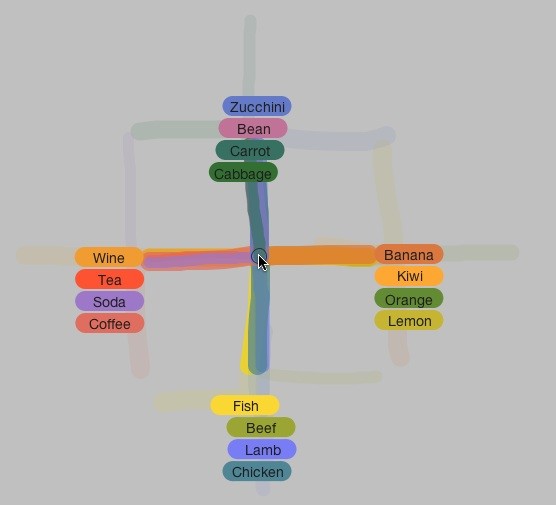

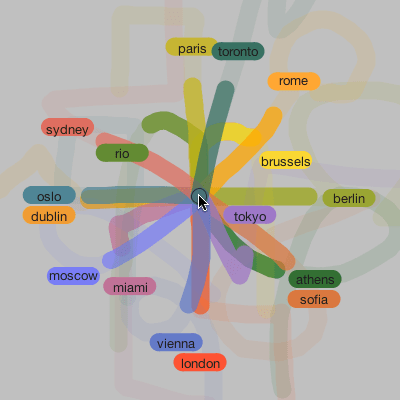

OctoPocus is a dynamic guide that combines on-screen feedforward and feedback to help users learn, execute and remember gesture sets. OctoPocus can be applied to a wide range of gesture sets, from simple, direction-only marks to arbitrarily complex gestures. It can be used with almost any recognition algorithm, including both incremental and non-incremental strategies. OctoPocus helps users progress rapidly from novice to expert performance in a playful, yet efficient, way.

How does OctoPocus work?

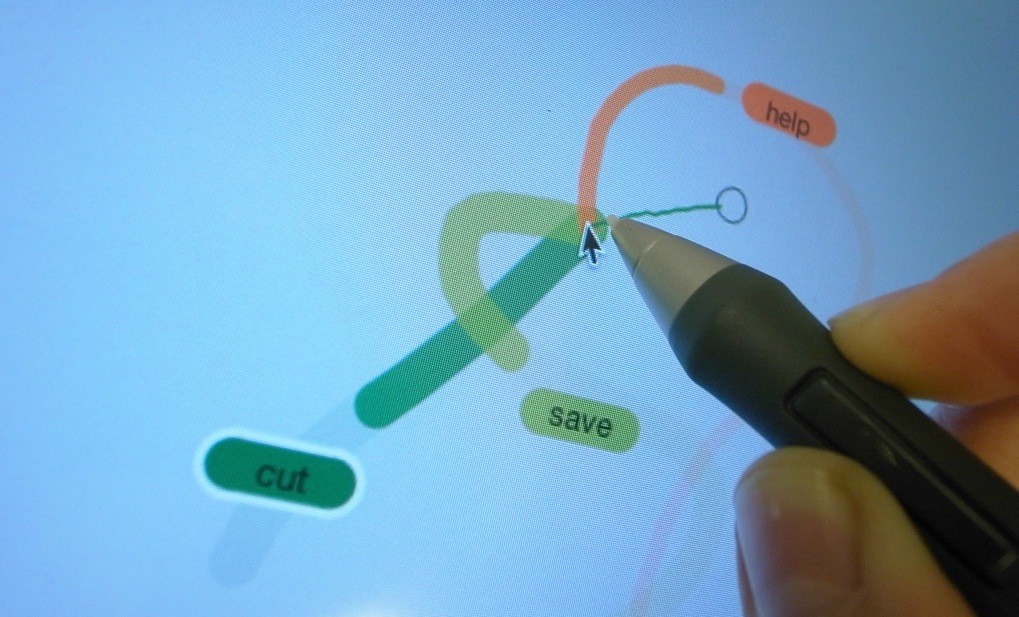

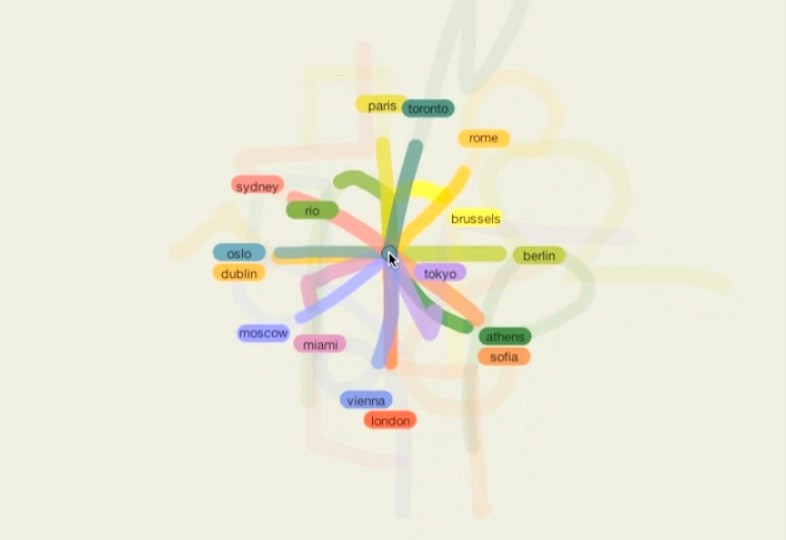

OctoPocus’ feedforward mechanism guides the user’s input. For each gesture class, it displays a template representing a “perfect” gesture. To reduce visual complexity, OctoPocus highlights only a prefix of each template.

First, to invoke the print command, the user identifies the guiding path associated with that command (Left Figure). As the user begins to follow this path, its guiding prefix is continuously updated (Middle Figure) until the user reaches the end (Right Figure). When the user releases the mouse button, the gesture is complete and the associated command is triggered.
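The page does not say how long the highlighted prefix is or how it is extracted. The sketch below shows one plausible way to compute it, assuming templates are polylines of (x, y) points and that the user’s progress along a template is the arc length of the input drawn so far. The functions `path_length`, `point_at` and `guide_window`, and the `window` length, are hypothetical names chosen for illustration, not part of OctoPocus.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def path_length(points: List[Point]) -> float:
    """Total arc length of a polyline."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def point_at(points: List[Point], s: float) -> Point:
    """Point at arc length s along the polyline, clamped to its end points."""
    if s <= 0:
        return points[0]
    travelled = 0.0
    for a, b in zip(points, points[1:]):
        seg = math.dist(a, b)
        if seg > 0 and travelled + seg >= s:
            t = (s - travelled) / seg  # fraction of this segment to cover
            return (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))
        travelled += seg
    return points[-1]


def guide_window(template: List[Point], progress: float, window: float) -> List[Point]:
    """Part of the template between arc lengths `progress` and `progress + window`.

    `progress` is the length of the input drawn so far; `window` is a
    hypothetical fixed length for the highlighted prefix.
    """
    total = path_length(template)
    start = min(progress, total)
    end = min(progress + window, total)
    visible = [point_at(template, start)]
    travelled = 0.0
    for a, b in zip(template, template[1:]):
        travelled += math.dist(a, b)
        # Keep template corners that fall strictly inside the visible window.
        if start < travelled < end:
            visible.append(b)
    visible.append(point_at(template, end))
    return visible
```

For an L-shaped template `[(0, 0), (100, 0), (100, 100)]`, calling `guide_window(template, progress=50, window=80)` yields the points (50, 0), (100, 0) and (100, 30): the visible guide starts where the user currently is and reaches just around the corner. Recomputing it on every input event gives the continuously updated prefix described above.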

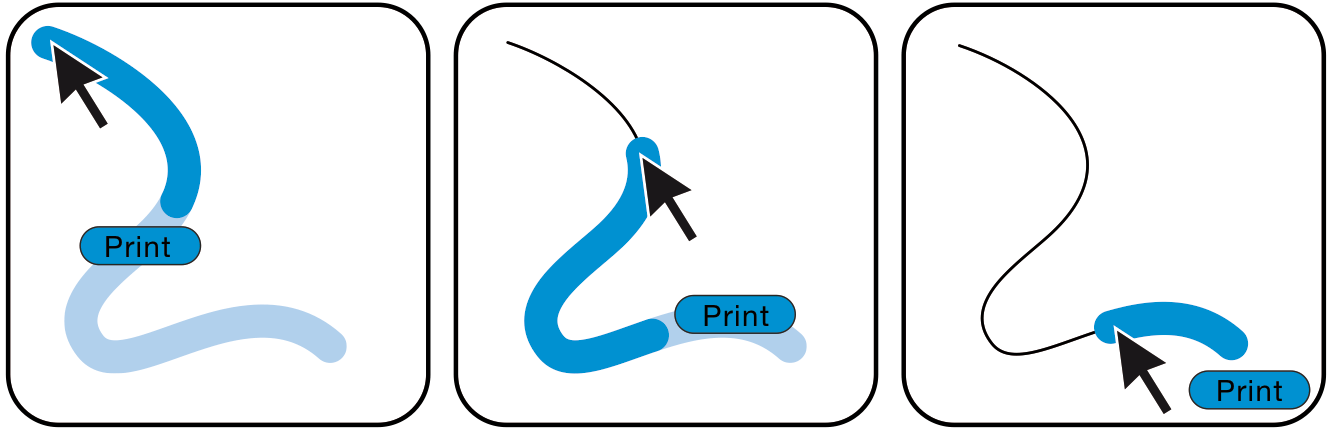

The feedback provided by OctoPocus shows how the current input is being interpreted. It, too, is continuously updated while the user draws a gesture. The thickness of each guiding template reflects how much more error the user can make before the corresponding gesture can no longer be recognized.

When OctoPocus appears, all paths have the same thickness (Left Figure). As the user begins to follow the yellow path (Middle Figure), the green one becomes thinner because its recognition becomes less likely. Finally, once recognition of the green gesture becomes impossible, its path disappears (Right Figure).
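The page does not state which error measure or mapping OctoPocus uses, so the following is only a hedged illustration. It substitutes a simple measure (the mean distance between the stroke and the equally long prefix of each template, sampled at evenly spaced arc-length positions) and maps the remaining error budget linearly to a stroke width. `prefix_error`, `thicknesses`, `max_error` and `max_thickness` are hypothetical; a real system would derive the error from its own recognizer.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def path_length(points: List[Point]) -> float:
    """Total arc length of a polyline."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def point_at(points: List[Point], s: float) -> Point:
    """Point at arc length s along the polyline, clamped to its end points."""
    if s <= 0:
        return points[0]
    travelled = 0.0
    for a, b in zip(points, points[1:]):
        seg = math.dist(a, b)
        if seg > 0 and travelled + seg >= s:
            t = (s - travelled) / seg
            return (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))
        travelled += seg
    return points[-1]


def prefix_error(stroke: List[Point], template: List[Point], samples: int = 16) -> float:
    """Mean distance between the stroke and the template prefix of equal arc length.

    The template is translated so that it starts where the stroke starts.
    This is a hypothetical stand-in for a recognizer's own distance measure.
    """
    length = path_length(stroke)
    dx = stroke[0][0] - template[0][0]
    dy = stroke[0][1] - template[0][1]
    total = 0.0
    for i in range(samples):
        s = length * i / (samples - 1)  # evenly spaced arc-length positions
        tx, ty = point_at(template, s)
        total += math.dist(point_at(stroke, s), (tx + dx, ty + dy))
    return total / samples


def thicknesses(stroke: List[Point], templates: Dict[str, List[Point]],
                max_error: float = 20.0, max_thickness: float = 8.0) -> Dict[str, float]:
    """Width of each guide, proportional to the error budget still left (0 means hide)."""
    widths = {}
    for name, template in templates.items():
        remaining = 1.0 - prefix_error(stroke, template) / max_error
        widths[name] = max_thickness * max(0.0, remaining)
    return widths
```

With a yellow template going right then down, `[(0, 0), (100, 0), (100, 100)]`, and a green one going down then right, `[(0, 0), (0, 100), (100, 100)]`, a single-point stroke gives both paths the full width of 8. A 10-pixel stroke to the right keeps yellow at full width while green shrinks to about 5.2; after 40 pixels green reaches 0 and would be hidden, mirroring the three figures.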

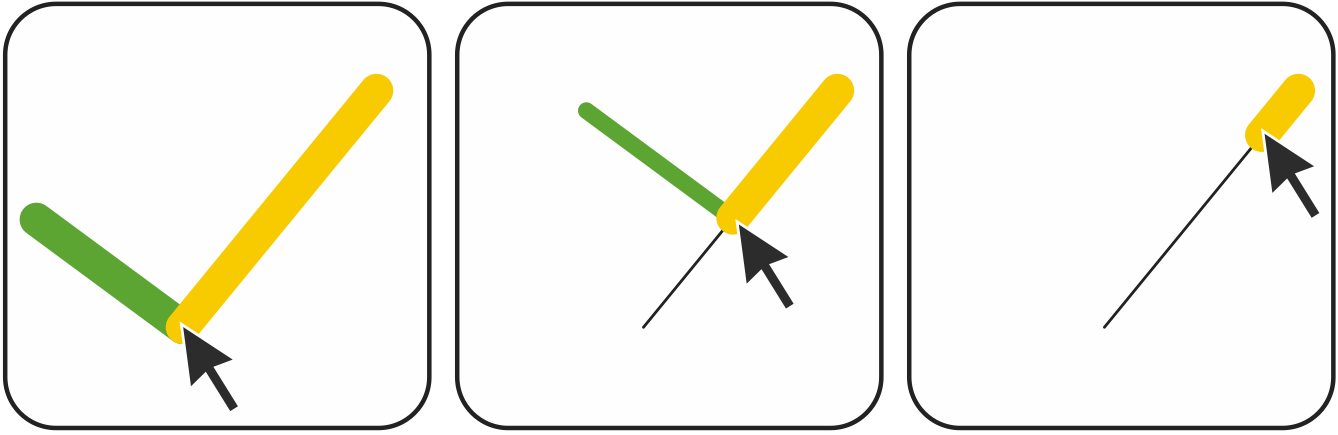

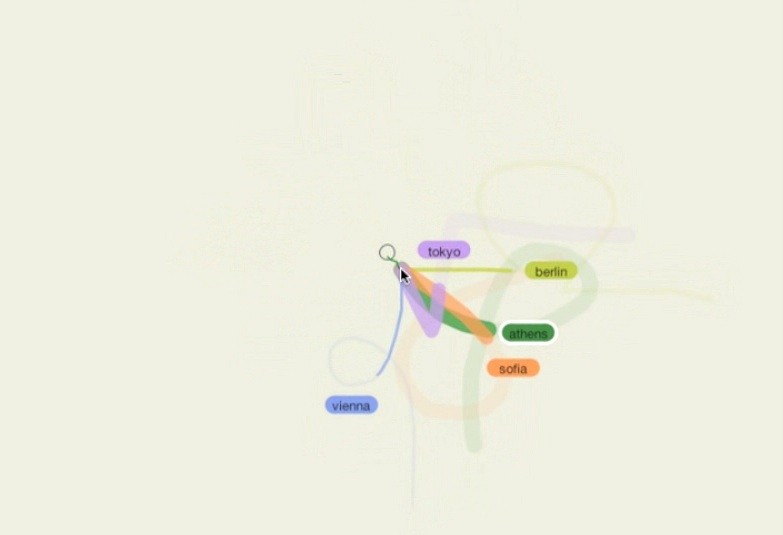

OctoPocus can be used with a wide range of incremental and non-incremental gesture recognition algorithms. To classify an incomplete input and compute its distance to each class while the user is still drawing, we choose the most representative template T of a given gesture class (Left Figure). We then remove from T a prefix whose length equals that of the user’s input, resulting in a sub-template subT (Middle Figure). Finally, we concatenate the user’s current input stroke with subT (Right Figure). The resulting shape, perfT, is the user’s partial input completed by a perfect drawing of the given class, and can be passed as input to the recognition algorithm.
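The construction of perfT can be sketched as follows, again assuming polyline templates. Two details are assumptions rather than statements from the page: lengths are measured as arc length, and subT is translated so that it starts at the last point of the user’s stroke before being appended. `sub_template` and `complete_with_template` are hypothetical names; the returned shape would then be handed to whatever recognizer is in use.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def path_length(points: List[Point]) -> float:
    """Total arc length of a polyline."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def point_at(points: List[Point], s: float) -> Point:
    """Point at arc length s along the polyline, clamped to its end points."""
    if s <= 0:
        return points[0]
    travelled = 0.0
    for a, b in zip(points, points[1:]):
        seg = math.dist(a, b)
        if seg > 0 and travelled + seg >= s:
            t = (s - travelled) / seg
            return (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))
        travelled += seg
    return points[-1]


def sub_template(template: List[Point], length: float) -> List[Point]:
    """subT: what is left of `template` after removing a prefix of arc length `length`."""
    if length >= path_length(template):
        return [template[-1]]  # the whole template has been "consumed"
    rest = [point_at(template, length)]
    travelled = 0.0
    for a, b in zip(template, template[1:]):
        travelled += math.dist(a, b)
        if travelled > length:  # keep only vertices beyond the cut point
            rest.append(b)
    return rest


def complete_with_template(stroke: List[Point], template: List[Point]) -> List[Point]:
    """perfT: the user's stroke followed by subT, translated to start at the stroke's end."""
    sub_t = sub_template(template, path_length(stroke))
    dx = stroke[-1][0] - sub_t[0][0]
    dy = stroke[-1][1] - sub_t[0][1]
    # After translation sub_t[0] coincides with the stroke's last point, so skip it.
    tail = [(x + dx, y + dy) for x, y in sub_t[1:]]
    return list(stroke) + tail
```

For a 50-pixel stroke from (0, 0) to (30, 40) and the template `[(0, 0), (100, 0), (100, 100)]`, the result is `[(0, 0), (30, 40), (80.0, 40.0), (80.0, 140.0)]`. Because only this completed shape reaches the recognizer, the recognizer itself never has to handle partial input, which is presumably what lets the approach work with non-incremental algorithms.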

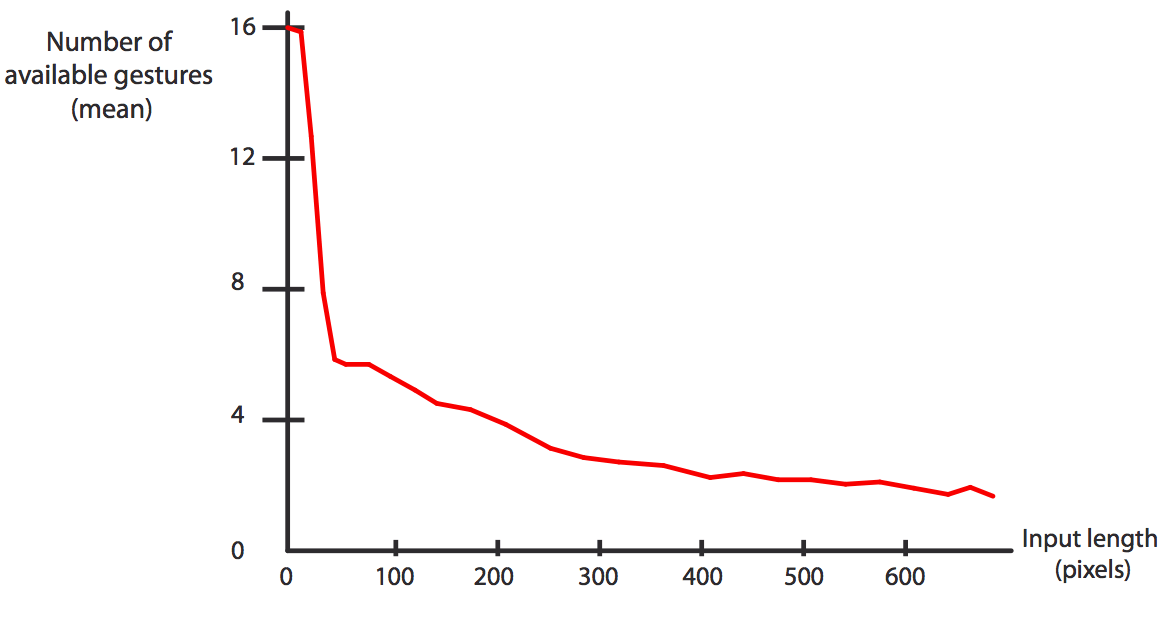

One concern is that OctoPocus may become visually cluttered if the gesture set contains many commands. In this example, OctoPocus is applied to 16 different gestures. The graph plots the length of the input in pixels on the X axis and the number of remaining gestures displayed around the cursor on the Y axis. After an input of only 50 pixels, the number of paths still displayed around the cursor drops to about 6.

What are the applications of OctoPocus?

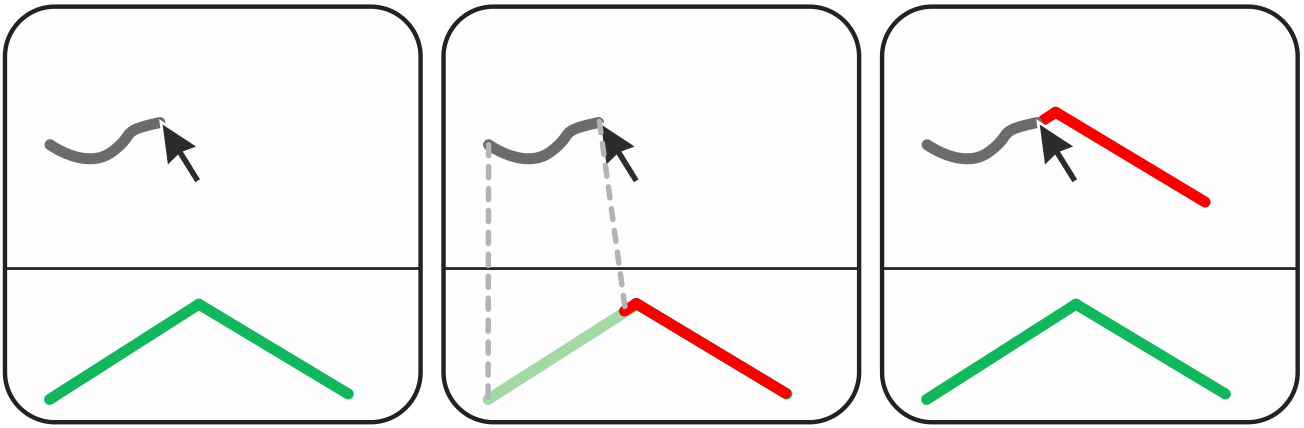

OctoPocus can be applied to a wide range of gesture sets, from simple, direction-only marks, e.g., marking menus such as those used in Autodesk’s Maya, to arbitrarily complex gestures, e.g., Android gestures.

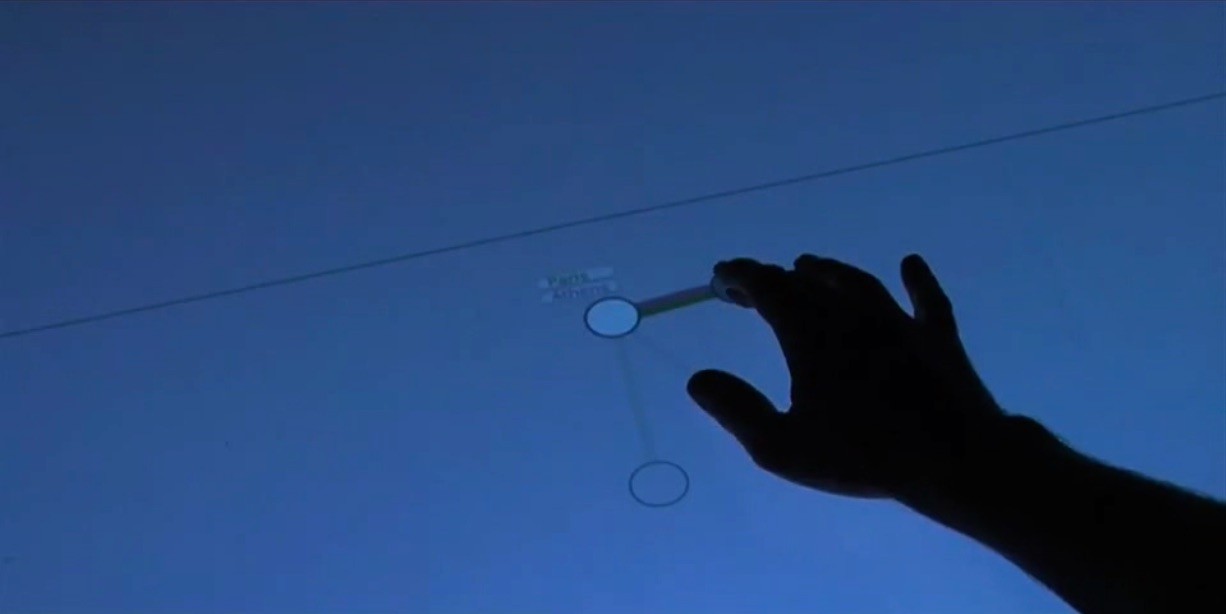

The principle of OctoPocus can also help users learn, execute and remember other types of gestures in a playful and efficient way. Chord gestures, for example, consist of the simultaneous placement of multiple fingers on a touch surface. These gestures are inspired by piano chords and stenographs. Engelbart first introduced such gestures for interacting with computers in his 1968 demo, along with the mouse. Chord gestures are a very efficient way to interact with a computer, but they suffer from a steep learning curve. Arpege, which builds upon OctoPocus’ principles, helps users learn and execute chord gestures. More information can be found on the dedicated project page.

Publications

OctoPocus: A Dynamic Guide for Learning Gesture-Based Command Sets.

O. Bau and W. Mackay. 2008. In Proc. of UIST ’08. ACM.

Scale Detection for a priori Gesture Recognition.

C. Appert and O. Bau. 2010. In Proc. of CHI ’10. ACM.